Math Puzzle: Rich Get Richer?

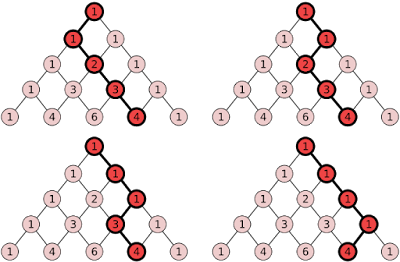

Today I'm going to talk about a math problem whose answer is simultaneously simple and unintuitive. Here is the problem statement:

You have an urn that contains $1$ red ball and $1$ blue ball. You repeatedly draw one ball from the urn at random, then put two balls of that same color back into the urn. How does this system behave over time? Specifically, what is the probability that at some point the urn will contain exactly $r$ red balls and $b$ blue balls?

If you want to see my solution, read on. Otherwise, you can try to figure it out for yourself first. Also, if you have other interesting questions you want to ask about this system, feel free to post them in the comments below.

Intuitively, this seems like a "rich get richer" problem, in that the long-term behavior of the system depends on how "lucky" we are in the early stages (i.e., how many red and blue balls we pick). For example, if we pick m...
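If you want to check your intuition numerically, here is a minimal Python sketch. It is my own illustration, not the original solution, and the function names `reach_probability` and `simulate` are hypothetical. It relies on one observation from the problem statement: each step removes one ball and returns two, so the urn gains exactly one ball per draw. After $n$ draws it holds $n + 2$ balls, which means the state with $r$ red and $b$ blue balls can only occur after exactly $r + b - 2$ draws. That lets us compute the probability exactly by tracking the distribution of the red-ball count draw by draw, with a Monte Carlo simulation as a sanity check.

```python
from fractions import Fraction
import random


def reach_probability(r: int, b: int) -> Fraction:
    """Exact probability that an urn starting with 1 red and 1 blue ball
    ever holds exactly r red and b blue balls (hypothetical helper).

    Each draw adds one ball, so (r, b) can only occur after exactly
    r + b - 2 draws; we propagate the distribution of the red count.
    """
    if r < 1 or b < 1:
        return Fraction(0)  # the urn never loses a color entirely
    draws = r + b - 2
    # dist maps red-ball count -> probability after the current number of draws
    dist = {1: Fraction(1)}
    for n in range(draws):
        total = n + 2  # balls in the urn before this draw
        new_dist = {}
        for red, p in dist.items():
            blue = total - red
            # Drawing red (prob red/total) adds one red ball.
            new_dist[red + 1] = new_dist.get(red + 1, Fraction(0)) + p * Fraction(red, total)
            # Drawing blue (prob blue/total) leaves the red count unchanged.
            new_dist[red] = new_dist.get(red, Fraction(0)) + p * Fraction(blue, total)
        dist = new_dist
    return dist.get(r, Fraction(0))


def simulate(r: int, b: int, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the same probability (hypothetical helper)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        red, blue = 1, 1
        # Stop once the urn holds r + b balls; (r, b) cannot appear later.
        while red + blue < r + b:
            if rng.random() < red / (red + blue):
                red += 1
            else:
                blue += 1
        # With r + b balls in the urn, red == r implies blue == b.
        hits += red == r
    return hits / trials


if __name__ == "__main__":
    for r, b in [(1, 1), (2, 1), (3, 2), (4, 1), (5, 5)]:
        exact = reach_probability(r, b)
        print(f"r={r}, b={b}: exact={exact}, simulated~{simulate(r, b):.3f}")
```

Using exact fractions avoids rounding error in the dynamic program, while the simulation follows the draw-and-replace rule literally. Comparing the printed values for pairs with the same total $r + b$, such as $(3, 2)$ and $(4, 1)$, is a quick way to test the "rich get richer" intuition.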