Markov Chains and Baseball

In this blog post, I will give some general background info about Markov Chains, a mathematical structure that arises in Probability theory. I was first introduced to Markov Chains last year and I found them extremely interesting. I found them particularly interesting because they combine ideas from linear algebra, graph theory, and probability theory. Markov chains have applications in many real world situations, including sabermetrics and stock market analysis.

A Markov Chain can be thought of as a special type of graph or a non-deterministic finite automaton. Basically, that means that there are a set of states, and you can transition from a set of different states from any given state. The transitions are weighted by probability measures. There are lot's of interesting question you can ask about Markov chains - my favorite is the random walk type problems. Markov chains can be used to model a variety of real world situations, including baseball simulations, stock market behavior, and physical processes.

Each cell $(i,j)$ in the transition matrix represents the probability of transitioning to j from i. Note that the transition probabilities are only dependent on the current state, not on the previously visited states.

Here is a toy problem which can be modeled with Markov chains:

This process can be modeled with the following transition matrix:

$$ \begin{bmatrix} p & 1- p \\ q & 1-q \end{bmatrix} $$

And we could numerically solve this problem by working with this matrix in ways that I'm not going to cover in this post. Instead, I will show you how to solve this problem analytically just for fun.

First, notice that $f(0)=1$, because we start by flipping Coin 1 by definition. Further, we can say

$$f(n)=pf(n−1)+q(1−f(n−1))$$

To evaluate $ \lim_{n\rightarrow \infty} f(n) $, I must first assume that the limit converges, which is a reasonable assumption as long as $0<p,q<1$. Let x be that limit. More formally,

$$ \lim_{n \rightarrow \infty} f(n) = x = \lim_{n \rightarrow \infty} f(n-1) $$

Now, we can solve an equation for the desired limit:

$$ x=p \cdot x+q \cdot (1−x) $$

$$ x=\frac{q}{1+q−p} $$

While this limit does not give us any information about the case when p = 1 and q = 0, the solution for that case is trivial from the context of the problem. It makes sense that the recurrence relation fails here because it doesnt know about the base case (i.e., the starting coin), and that is the determining factor (the starting coin doesn't actually matter for other probabilities, however).

Markov Chains are a really cool mathematical structure, and they have been used to model baseball games. Each inning can be represented as a Markov Chain, where the states consist of all the combinations of base runners and outs. At any given time, there are either 0, 1,or 2 outs, and there are 8 possible ways for base runners to be on base for a total of 24 possible states. Thus, it would require a 24 x 24 transition matrix to model this scenario. This approach is a mathematical idealization, but it is somewhat limited because it doesn't distinguish between different base runners - it only models whether or not there is a base runner on any given base.

Markov Chains are one particular area of probability theory that I find particularly interesting. I really like them because they combine ideas from many different domains (including linear algebra, probability theory, graph theory, and automata theory). I also really like them because some of the most interesting math problems I've seen have been of the random walk variety, and these structures can be used to help solve these types of problems.

A Markov Chain can be thought of as a special type of graph or a non-deterministic finite automaton. Basically, that means that there are a set of states, and you can transition from a set of different states from any given state. The transitions are weighted by probability measures. There are lot's of interesting question you can ask about Markov chains - my favorite is the random walk type problems. Markov chains can be used to model a variety of real world situations, including baseball simulations, stock market behavior, and physical processes.

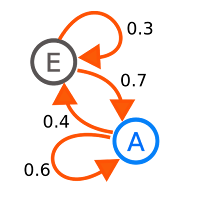

Here is a simple example of a 2 state Markov chain, where the edges between states represent the probability of transitioning to to the that state. This Markov chain can be compactly represented as a transition matrix.

$$ \begin{bmatrix} 0.3 & 0.7 \\ 0.4 & 0.6 \end{bmatrix} $$

Here is a toy problem which can be modeled with Markov chains:

You have 2 biased coins. Coin 1 returns heads with probability $p$ (and tails otherwise), and Coin 2 returns heads with probability $q$ (and tails otherwise). You start by flipping Coin 1. After each flip, if you get a heads, then you flip Coin 1, and if you get tails, then you flip Coin 2. Let $f(n)$ be the probability that the $n^{th}$ flip is heads. Find $ \lim_{n \rightarrow \infty} f(n) $

This process can be modeled with the following transition matrix:

$$ \begin{bmatrix} p & 1- p \\ q & 1-q \end{bmatrix} $$

And we could numerically solve this problem by working with this matrix in ways that I'm not going to cover in this post. Instead, I will show you how to solve this problem analytically just for fun.

First, notice that $f(0)=1$, because we start by flipping Coin 1 by definition. Further, we can say

$$f(n)=pf(n−1)+q(1−f(n−1))$$

To evaluate $ \lim_{n\rightarrow \infty} f(n) $, I must first assume that the limit converges, which is a reasonable assumption as long as $0<p,q<1$. Let x be that limit. More formally,

$$ \lim_{n \rightarrow \infty} f(n) = x = \lim_{n \rightarrow \infty} f(n-1) $$

Now, we can solve an equation for the desired limit:

$$ x=p \cdot x+q \cdot (1−x) $$

$$ x=\frac{q}{1+q−p} $$

While this limit does not give us any information about the case when p = 1 and q = 0, the solution for that case is trivial from the context of the problem. It makes sense that the recurrence relation fails here because it doesnt know about the base case (i.e., the starting coin), and that is the determining factor (the starting coin doesn't actually matter for other probabilities, however).

Markov Chains are a really cool mathematical structure, and they have been used to model baseball games. Each inning can be represented as a Markov Chain, where the states consist of all the combinations of base runners and outs. At any given time, there are either 0, 1,or 2 outs, and there are 8 possible ways for base runners to be on base for a total of 24 possible states. Thus, it would require a 24 x 24 transition matrix to model this scenario. This approach is a mathematical idealization, but it is somewhat limited because it doesn't distinguish between different base runners - it only models whether or not there is a base runner on any given base.

Markov Chains are one particular area of probability theory that I find particularly interesting. I really like them because they combine ideas from many different domains (including linear algebra, probability theory, graph theory, and automata theory). I also really like them because some of the most interesting math problems I've seen have been of the random walk variety, and these structures can be used to help solve these types of problems.

Comments

Post a Comment